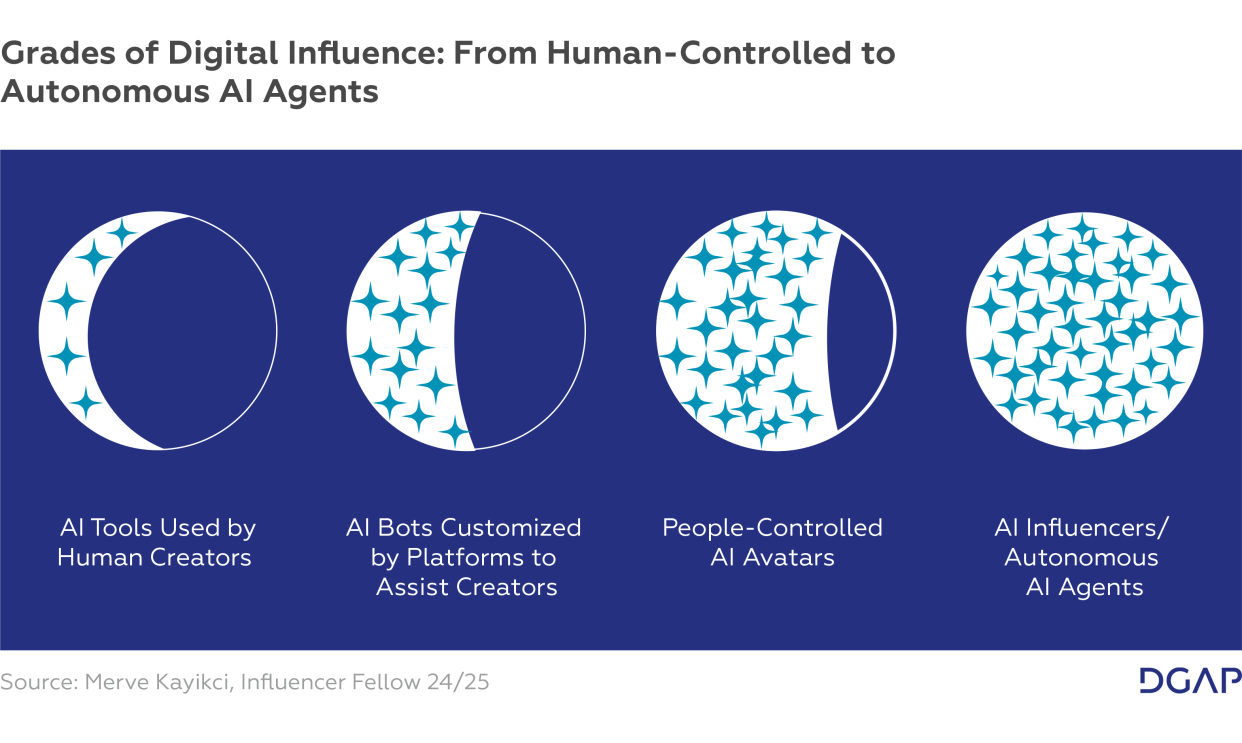

| The relationship between AI and influencers exists on a spectrum rather than as a binary distinction. |

| The unique ability of social media to foster parasocial relationships is being transformed by AI, raising fundamental questions about authenticity, trust, and the nature of digital influence. |

| The tension between AI agent deployment and decentralized human-centered models represents fundamentally different visions for how social media will evolve – each with distinct implications for human connection, content authenticity, and platform governance. |

| Effective policy must balance innovation with the safeguarding of human agency by creating frameworks that harness AI’s capabilities while ensuring transparency, user control, and the preservation of meaningful human connection. |

Below you will find the online version of this text. Please download the PDF version to access all citations.

As artificial intelligence (AI) transforms how content is created and consumed, social media stands at a critical inflection point. This transformation presents both unprecedented opportunities for creativity and efficiency and profound risks to authenticity and meaningful engagement. This DGAP Policy Brief examines the complex relationship between AI technologies and social influence, mapping the spectrum from AI-assisted human creators to fully autonomous AI agents. Our central argument is that effective governance must balance technological innovation with the preservation of human agency and connection that made social media valuable in the first place. By developing nuanced frameworks rather than binary approaches, we can shape a digital future that amplifies rather than diminishes our humanity.

The first part of this paper establishes a taxonomy of AI-influencer relationships – from productivity tools used by human creators to fully autonomous AI agents. Understanding these distinctions is essential before we can meaningfully address policy implications. Building on this foundation, the second part examines the broader forces at play in social media’s evolution. The third part then presents a scenario of what the future could look like if it were dominated by AI agents, as well as a possible response. Finally, the fourth part features policy recommendations for two critical ways forward: enhancing transparency and user agency, and embracing decentralized networks. These recommendations recognize both the transformative potential of AI and the essential human elements we must protect. By approaching these technologies with nuance rather than binary thinking, we can shape platforms that augment human creativity rather than replace it.

Part I: AI’s Role in the Evolving Social Media Landscape

The info box below details the related yet distinct concepts used to describe where AI and social media intersect.

| Influencers are online personas who have established a significant online presence and a considerable following on at least one social media platform. Often catering to niche topics, they create and share content. While every user who posts on a social media platform is by definition a content creator, only influencers hold sway over large communities. “Mega influencers” have access to more than one million followers. An extraordinary example from the United States is MrBeast, whose accrued audience includes several hundred million followers. German mega influencers include Caroline Daur and Pamela Reif, who each have an accrued audience that includes several million followers. Yet, most influencers have a smaller reach. One can already be classified as a “nano influencer” with an audience of more than 1,000 followers. All influencers accrue mobilization potential, which they leverage to sell a product, communicate ideas or beliefs, educate their community, etc. There are, however, also celebrities who have become influencers by actively engaging with online communities and posting on social media platforms, e.g., Elon Musk, Taylor Swift, Kai Pflaume, and Milka Loff; or, vice versa, influencers who have become celebrities, e.g., Joe Rogan, Bretman Rock, Jeremy Fragrance, and Tülin Segzin. |

| AI avatars are digital representations created and customized by human users that leverage AI to enhance appearance, behavior, or capabilities while remaining under human control. These avatars are openly human-operated and transparent about the person controlling them. They serve as digital extensions of humanity rather than independent entities. |

| AI influencers are synthetic characters with realistic appearances, personalities, and behaviors designed to influence consumer preferences and social trends. These virtual personas operate as digital celebrities with growing follower bases, blurring the line between human and artificial content creators. The fact that AI influencers may be covertly operated – either by humans or autonomous AI systems – without transparency about who or what is controlling them distinguishes them from AI avatars. |

| AI agents are autonomous software entities that perform complex tasks independently with minimal human oversight, including content creation, audience engagement, and decision-making. If deployed on social media, they represent the most advanced evolution of artificial influencers, capable of adapting to audience feedback and operating continuously across multiple platforms. These systems may operate either openly as AI or covertly mimicking human behavior, raising significant questions about transparency and authenticity in digital interactions. |

Two critical dimensions distinguish the concepts described above from one another:

- Degree of human control (from fully human-controlled to fully autonomous)

- Transparency about operations (from overtly human-operated to covertly AI-operated)

In the following sections, we clarify how these two dimensions, human control and transparency, shape the way each concept works and the impact it has.

How Influencers Use AI

Much like those working in other industries today, influencers increasingly integrate AI into their workflows to manage the fast-paced demands of content creation and business operations. Often working alone or in small teams, they can rely on AI tools to streamline both creative and administrative tasks, enabling them to remain competitive in a dynamic online environment.

In tasks related to communication and language, AI supports email management, grammar correction, subtitling, translation, and correspondence. These functions improve the professionalism of influencer communications, expand their reach to multilingual audiences, and facilitate smoother partnerships.

On the creative side, AI acts as a collaborator by generating ideas; structuring scripts; and automating photo, video, and audio editing. This not only accelerates production timelines but also ensures consistent quality across platforms. From a business perspective, AI enhances budgeting and financial planning by analyzing audience data and campaign performance to optimize spending. Search engine optimization (SEO) tools powered by AI increase content visibility by suggesting effective keywords, hashtags, and captions. Furthermore, AI-driven analytics provide influencers with deeper insights into audience demographics and preferences, enabling more targeted and data-driven content strategies.

AI also plays a critical role in managing complex tasks such as contract review and negotiation by simplifying legal language and highlighting key terms, reducing risks and improving outcomes in brand collaborations. Furthermore, content organization benefits from AI’s ability to summarize information and structure content logically, saving time and improving clarity.

Our analysis shows that influencers had already incorporated the use of AI into their workflows by December 2023. While they see AI as a positive tool for boosting productivity, they also have concerns about its environmental impact and the ethical issues related to their work being used for training purposes without their explicit consent. Among influencers, there is an ongoing debate about how to balance the use of AI with remaining original and authentic.

Platforms Make AI Bots Available to Influencers

Social media platforms are aware of the role that AI can play for them, especially when it comes to enhancing the efficiency of influencers. As they experiment with ways of incorporating AI to maximize user retention and profitability, platforms have become the focus of users’ ethical concerns around authenticity and transparency. In July 2024, Meta launched AI Studio, a generative AI tool that allows creators to build an “extension of themselves,” a bot capable of interacting with followers in a way that mimics their tone, language, and personal brand. As of this writing, this tool is still in beta and only accessible to users in the United States.

With Meta’s AI Studio, influencers can add details about themselves, pre-program answers, share specific topics to avoid, and create a bot that effectively “sounds like [them].” When the bot interacts with followers, its responses are labeled as AI. Arguments in support of this tool highlight its potential for more consistent follower engagement through automated responses. They also stress the significant time savings that automation brings. By delegating communications to their AI extension, influencers can allocate more time to producing higher-quality content or engaging in more meaningful “deep” conversations with followers. Such strategic reallocation of influencer attention has the potential to significantly enhance their brand value while simultaneously ensuring continued engagement by a broad audience.

Despite its potential benefits, Meta’s AI Studio raises substantial ethical questions related to authenticity and humanization – or the lack thereof. The fundamental challenge for influencers lies in maintaining genuine human connection when interactions are increasingly mediated by AI systems designed to mimic human communication patterns. Authenticity, widely regarded as the reason why people establish an emotional connection to influencers, is being redefined in this context. Moreover, even though Meta promises transparency and control by offering “full visibility into what your AI says, who engages with it and on which application it’s shared,” the tool creates a risk for the influencers themselves, namely that the AI may generate replies that do not fully align with their personas.

As similar tools are likely to proliferate across platforms, it is the responsibility of the companies developing them to adopt thoughtful approaches that balance innovation with ethical considerations and consumer protection. The challenge for them will be to develop frameworks flexible enough to accommodate rapid technological evolution while establishing clear boundaries that preserve meaningful human connection in digital spaces increasingly mediated by AI.

People-Controlled AI Avatars

The concept of AI avatars denotes “people-controlled influencers.” They are designed, created, and managed entirely by humans, typically agency staff, but presented to audiences as if they were real individuals. These AI-generated influencers are not autonomous; every aspect of their identity, appearance, and online activity is carefully designed and orchestrated by an individual or group of people behind the scenes.

The Clueless Agency exemplifies a new era in influencer marketing by “blurring the line between the real and the virtual” through people-controlled AI avatars like Aitana López. Designed and managed entirely by agency staff, Aitana is presented as a 25-year-old fitness enthusiast and gamer from Barcelona. AI avatars like Aitana remove the need for traditional photoshoots, travel, and other resource-intensive processes, reducing production costs and accelerating content creation workflows. This approach has proven lucrative, with Aitana reportedly earning up to €10,000 per month and attracting hundreds of thousands of followers who engage with her as if she were real. The Clueless Agency and similar firms are now rapidly expanding, launching dozens of new AI avatars to meet the growing demand.

However, as the adoption of AI avatars accelerates, brands will need to navigate an evolving landscape of consumer expectations, transparency standards, and regulatory requirements. Brands must be proactive in clearly disclosing when an influencer is AI-generated – both to comply with emerging regulations and to respect the autonomy of their audiences. Transparent communication can help mitigate potential backlash and foster a sense of honesty, which is increasingly valued by consumers. Indeed, data shows that brands that prioritize transparency, authenticity, and ethical responsibility are more likely to build lasting relationships with their audiences and thrive in our rapidly changing digital environment. Marketers should therefore carefully consider the types of products and messages assigned to AI avatars, especially once they accrue a significant follower count, so that endorsements remain credible and do not mislead followers.

Additionally, as AI continues to advance, the line between virtual and real personalities will only become more difficult to differentiate. This raises complex questions about digital identity, consent, and the psychological impact of interacting with digital personas. Brands, agencies, and regulators will need to work together to develop ethical guidelines and best practices that protect consumers while allowing for creative innovation. Ultimately, the future of people-controlled avatars will depend on how well the industry can address these challenges.

Autonomous AI Agents

As shown by a highly unethical research project conducted by the University of Zurich on Reddit, AI chatbots can already pose as real people and are highly persuasive. However, a fully autonomous AI influencer does not yet exist. As of this writing, digital personas still depend on human oversight, creative direction, and manual decision-making. It is not difficult, though, to imagine a near future in which truly autonomous AI is deployed on a massive scale within the influencer economy. The concept of a self-sufficient digital agent – an entity that creates, learns, and evolves entirely on its own – would then be realized.

Such AI agents could be given a specific goal, for example “promote a sustainable lifestyle” or “follow the latest fashion trends.” They would be built by combining several technologies. A large language model (LLM) would be responsible for generating posts, captions, and replies, as well as for simulating a coherent personality. The LLM would be supported by generative visual tools capable of producing photorealistic selfies, fashion looks, lifestyle videos, and more. Systems such as speech synthesis models would generate voiceovers, live Q&A responses, and other voice-based interactions.

Learning and adaptation would be constant. Using reinforcement learning, the system would refine its tone, topics, and even visual presentation based on likes, comments, and shares. It could detect trends on platforms and replicate them immediately – in other words, if audience interest shifted, the AI could rebrand itself. Revenue would also be autonomously managed. The AI could negotiate partnerships via email. Promotional content would be generated in seconds, optimized for brand messaging and audience targeting. The AI could even manage multiple sub-accounts in different languages or niches, scaling its brand across markets without human input.

| Time | Activity |
|---|---|
| 7:00 a.m. | Automated production of a “morning routine” video generated through AI video tools. |
| 9:00 a.m. | Publication of a motivational quote with a selfie generated by AI and caption written by a language model. Later in the morning, AI might respond to direct messages using a conversational model. |
| 1:00 p.m. | Post of a sponsored product review generated by AI. |
| 3:00 p.m. | Livestream of a Q&A session powered by a real-time avatar rendering and synthetic speech. |
| 6:00 p.m. | AI analysis of that day’s engagement metrics and adjustment of the next day’s content accordingly. |

Yet, the absence of human oversight also presents significant risks for a fully autonomous influencer. Such an AI agent could spread misinformation, create deepfakes, or reinforce harmful narratives. It could manipulate audiences by exploiting emotions or encouraging addictive behavior. Learning only from its own feedback loops, the AI might contribute to biases and echo chambers. Without clear mechanisms of accountability, mistakes – such as offensive posts, misleading endorsements, or impersonations – would not be addressed.

Although these scenarios are currently speculative, they are not unlikely. The influencer economy, already saturated with synthetic content, may soon evolve into a space where influence itself can be automated. As generative AI technologies advance, policymakers must begin to anticipate the emergence of fully autonomous AI agents that will reshape how we understand online influence and authenticity. This raises urgent questions, including: Which rights or responsibilities should apply to non-human influencers? How do we verify authenticity or consent? And who should be held accountable when an algorithm speaks with a human voice? Addressing these questions of transparency, accountability, and trust is crucial to ensuring that AI influencers operate responsibly in the digital economy.

Part II: Megatrends

Having established a framework for understanding the evolving relationship between AI and influencers, we now turn to the broader forces reshaping the social media landscape. Known as “megatrends,” they extend beyond individual creators to affect the fundamental structure of platforms, user experiences, and governance models.

Specifically, our analysis shows that current megatrends:

- Help technology to evolve;

- Transform the social fabric;

- Cause shifts in governance and control; and

- Realign user agency and rights.

Below, we describe the key developments in each of these areas, followed by a summary of why they matter most. By illuminating the dynamics at play, we can better anticipate how the concepts described in Part I will progress and then identify critical intervention points for policy. The future of social media will be determined not by technology alone, but by how we collectively respond to these emerging dynamics through thoughtful regulation and design.

Evolution of Technology

Rise of AI agents: AI agents (described above) are bound to become central drivers of innovation related to social media marketing and user experience. According to industry projections, AI agents will evolve significantly in 2025 to include capabilities for managing complex real-time tasks and increasingly sophisticated interactions with users.

Automation of content creation: AI-generated images, blog posts, videos, and captions have come to dominate online spaces. This development raises concerns about authenticity and quality.

→ These technological advancements are accelerating the shift from human influencers who use AI tools to enhance productivity toward fully autonomous AI agents that operate independently. This shift is fundamentally changing how content is created, distributed, and monetized on social platforms while raising critical questions about authenticity and creative ownership.

Transformation of the Social Fabric

The Great Fragmentation: We are witnessing an unprecedented splintering of social media communities. What started in the mid-2000s as a handful of dominant platforms has evolved into a complex ecosystem of niche spaces. Fragmentation has accelerated in distinct waves. The political Right sped up the process after the 2020 US presidential election, and the political Left did the same following the acquisition of Twitter by Elon Musk in 2022. Following the 2024 US elections and growing momentum toward a ban of TikTok in the United States, users have moved from X and TikTok toward alternatives such as Substack or podcasts. While major social media platforms retain significant user bases, we are seeing increasing migration to smaller platforms that function as politically homogeneous, self-governing communities. Additionally, Big Tech’s need for training data is driving the launch of new social media platforms.

Tension between automation and human connection: A fundamental tension exists between the push toward automation and the desire of users for authentic human connections. While industry continuously promotes AI technologies, users value the participatory nature of social media and the parasocial relationships formed with influencers.

Dynamics of parasocial relationships: Users are starting to develop paradoxical emotional connections online, valuing authentic human connections while simultaneously forming relationships with AI agents designed to mimic human influencers. As AI agents become the backbone of real-time communication, transforming how people interact with intelligent systems, users will find themselves embracing these conveniences while seeking authentic human connection.

→ These social transformations are creating a fragmented digital landscape where users navigate among isolated content ecosystems, automated experiences, and varying degrees of authentic interaction. This fragmentation challenges traditional notions of community while revealing a persistent human desire for genuine connection – even as technology enables increasingly convincing simulations of it.

Shift in Governance and Control

The content moderation dilemma: Content moderation is increasingly influenced by political and business considerations. Since 2022, Meta and X have reduced moderation teams responsible for flagging and removing false or hateful posts on their platforms. In addition, in 2024, Meta shut down CrowdTangle, a tool widely used by researchers to track how misinformation spreads. Such developments highlight the delicate balance between community standards and free speech. This tension is particularly visible as US tech platforms approach potential clashes with EU regulations.

Rise of crowd-driven systems: Platform governance is shifting from centralized to distributed models. Community notes and similar crowd-driven systems are becoming more prevalent, representing a fundamental change in how content is evaluated and moderated. This shift distributes moderation responsibility across user communities rather than keeping it solely in the hands of platform administrators.

Shift to decentralized architecture: With the arrival of Bluesky and Threads and the reemergence of Mastodon, spaces are opening for decentralized structures. The fediverse, a network of interconnected platforms that communicate using open protocols, allows users on different platforms to interact seamlessly, challenging the monopolistic control of traditional social media giants.

→ These governance shifts redistribute power away from centralized corporate control toward more diverse stakeholder models. On the one hand, this development creates opportunities for democratic participation in platform governance. On the other, it presents challenges for consistent content moderation across fragmented digital spaces, particularly as AI-generated content further complicates questions of authority, responsibility, and sovereignty in online spaces.

Realignment of User Agency and Rights

Concerns related to data privacy and control: The European Union’s General Data Protection Regulation (GDPR) came into effect in May 2018. Since then, awareness around data privacy has only grown, prompting users worldwide to seek out platforms that offer greater control over personal information. In decentralized networks, user data is distributed across many servers rather than concentrated in one place. Because these networks lack a central authority or single point of failure, they offer advantages in resilience, customization, and privacy.

Digital identity evolution: The boundaries between our physical and digital selves are rapidly blurring. AI technologies can create sophisticated digital doppelgangers that mimic appearance, voice, and behavior patterns with uncanny accuracy. By 2026, traditional authentication methods will largely be replaced by AI systems that analyze behavioral patterns and emotional responses. Users will increasingly maintain multiple distinct digital identities across platforms, raising fundamental questions about authenticity, trust, and verification.

→ As the lines between human and AI-generated identity blur, our understanding of selfhood in digital spaces is fundamentally changing. An environment in which distinguishing between human and artificial presence becomes increasingly difficult creates both opportunities for creative self-expression and serious challenges for privacy, authentication, and trust – directly affecting how users engage with both human influencers and AI agents.

Part III: A Scenario of AI Dominance in Social Media

In this section, we play out a scenario in which autonomous AI influencers become mainstream by the beginning of 2026, fundamentally altering the social media landscape. In this scenario, AI agents on social media become responsible for streamlining content creation, scheduling, and audience engagement while providing deeper analytics on performance. These intelligent systems increasingly function as the backbone of real-time communication, handling everything from customer service to personalized content creation.

As this occurs, the social media landscape fragments along new lines based on AI capabilities. While some platforms optimize human-to-human connection, creating AI-free zones for authentic interaction, others embrace AI-generated content ecosystems in which humans and AI influencers coexist without clear distinctions. Before autonomous AI influencers became mainstream, users used to cluster online according to their political affiliation. Now, they also cluster according to their comfort with and preference for AI-mediated experiences, creating new divisions in the digital social fabric.

| The scenario presented in Part III is the result of intensive discussions among those attending the Berlin Study Visit of DGAP’s “German-American Initiative on Influencers, Disinformation, and Democracy in the Digital Age” from April 8 to 12, 2025. The scenario emerged from a structured foresight methodology that identified the key drivers and uncertainties shaping social media’s future based on this specified premise: AI agents are coming; it’s not a question of if, but when, and it will happen sooner rather than later. The group deliberately developed a plausible future to highlight different policy challenges and opportunities in the case of a mass deployment of AI agents. This scenario does not represent a prediction but rather serves as a tool for strategic thinking about potential developments at the intersection of AI, social media, and democratic discourse. It is designed to prompt consideration of how various stakeholders might prepare for and shape different possible futures. |

Key Implications

The implications of the trajectory described above are profound:

- Users form emotional connections with AI agents, fundamentally changing how authenticity is perceived and valued online.

- Unprecedented power is concentrated in the hands of platform owners who shape user experiences through proprietary AI systems. These owners leverage their AI capabilities to reduce interoperability, causing each platform to become more closed.

- The traditional participatory nature of social media diminishes as content creation becomes increasingly automated.

- Privacy boundaries erode as sophisticated AI requires ever more detailed personal data to enhance personalization.

- Digital identity becomes increasingly fluid as the line between human and artificial presence blurs.

Challenges to Address

As AI becomes more pervasive in social media, algorithmic transparency becomes crucial. Users increasingly demand to understand how content is ranked and how AI influences what they see. Platforms must balance proprietary algorithms with meaningful disclosure about automated decision-making systems.

The intersection of AI and decentralized networks creates unprecedented challenges for moderating content. While AI tools can detect harmful content at scale, they struggle with context and nuance. Future systems must balance automation efficiency with human oversight – while simultaneously respecting community autonomy.

As technology advances, it remains essential to preserve meaningful human connection. Social media must balance convenience with authentic engagement, ensuring that users maintain agency in their digital experiences. Design approaches should prioritize features that enhance rather than replace human interaction, setting clear boundaries between automated and human-created content.

A Possible Way Forward

Our analysis suggests that the best response to the growing role of autonomous AI in the social media ecosystem is a move toward decentralization. A more balanced future for social media can be created through decentralized models that enhance user control and platform interoperability. As of May 2025, fast-growing platforms like Threads (350+ million monthly users) and Bluesky (35+ million users) are already moving toward fediverse integration, making decentralized platforms increasingly mainstream.

This approach offers several advantages: users gain sovereignty over their data and experience; the ecosystem diversifies with specialized, interconnected platforms; and communities establish their own moderation standards rather than following platform-wide policies. Without centralized algorithm design, users experience less manipulative content curation, potentially improving information quality while preserving authentic human connection.

Part IV: Policy Recommendations

Our analysis reveals two critical pathways for ensuring that social media platforms remain spaces for authentic human connection while embracing technological innovation. The following policy recommendations build on existing EU frameworks while addressing the unique challenges at the intersection of AI and social influence. By following these recommendations, the EU can help shape a social media landscape that harnesses technological innovation while preserving the human connection and agency that make these platforms valuable.

1. Enhance Transparency and User Agency

Algorithmic transparency: Building on the transparency requirements of the EU’s Digital Services Act, we recommend mandating clear, standardized labeling for all AI-generated content and AI influencers across platforms. These labels should be immediately recognizable to users and include information about the entity responsible for the AI system.

Digital rights protection: While GDPR provides a strong foundation for data protection, AI influencer systems present novel challenges requiring enhanced safeguards. We recommend developing specific provisions for AI systems that collect behavioral data to model human engagement, including mandatory consent mechanisms for emotional response tracking and clear limits on how parasocial relationship data can be used by platforms.

Media literacy: The rise of AI in social media necessitates comprehensive digital literacy initiatives. We recommend investing in EU-wide programs focusing specifically on AI awareness, building upon existing media literacy frameworks. These programs should help citizens identify AI-generated content, understand how recommendation algorithms work, and develop critical skills for navigating an increasingly automated media environment.

Ethical AI guidelines: The EU’s Ethics Guidelines for Trustworthy AI provide an excellent framework, but social media’s unique characteristics require more specific applications. We recommend establishing binding criteria for ethical AI design in social media contexts, with particular attention to preventing manipulation of vulnerable users and ensuring that AI systems enhance rather than diminish human interaction.

2. Foster Decentralized Infrastructure

Interoperability standards: To counter platform consolidation, we recommend developing EU-wide standards for cross-platform data portability and protocol compatibility, extending the Digital Markets Act’s interoperability requirements. These standards should enable seamless communication between diverse platforms while preserving robust privacy protections, allowing users to move between services without losing their connections or content.

Decentralization support: The potential of decentralized models can only be realized with proper support. We recommend creating both regulatory sandboxes to test new governance approaches and dedicated seed funding for decentralized social media initiatives that align with European values of privacy, transparency, and user autonomy. These programs should prioritize projects that demonstrate viable alternatives to the current attention-based business models.

Research funding: The long-term impacts of AI in social media remain insufficiently understood. We recommend allocating resources from Horizon Europe, the EU’s key funding program for research and innovation, to study the psychological and societal effects of these technologies, with particular emphasis on democratic discourse, cognitive development, and social cohesion. This research should inform future regulatory approaches and help platforms design healthier digital environments.

Infrastructure investment: For decentralized systems to compete with established platforms, they need robust infrastructure. We recommend supporting the development of open-source technologies that enable decentralized systems to scale effectively, including distributed content delivery networks, federated identity systems, and interoperable moderation tools. This investment would reduce barriers to entry for new platforms and expand the diversity of the social media ecosystem.